Apple is allegedly planning to enter a new product category this year with the launch of its first mixed reality headset. Rumors indicate that the upcoming headset will support both AR and VR technology, and that it will have features that outshine competing products.

With the iPhone, iPad, and Apple Watch, Apple’s combination of hardware and software allowed it to dominate each category within a few short years of entering the market, and it’s likely the same thing will happen with augmented and virtual reality.

4K Micro-OLED Displays

Apple is using two high-resolution 4K micro-OLED displays from Sony that are said to have up to 3,000 pixels per inch. By comparison, Meta’s new top-of-the-line Quest Pro has LCD displays, so Apple is going to be offering much more advanced display technology.

Micro-OLED displays are built directly onto chip wafers rather than a glass substrate, allowing for a thinner, smaller, and lighter display that’s also more power efficient compared to LCDs and other alternatives.

Apple’s design will block out peripheral light, and display quality will be reduced in the wearer’s peripheral vision to cut down on the processing power necessary to run the device. The eye tracking functionality being implemented will let Apple lower graphical fidelity at the edges of the wearer’s view, away from where they are looking.
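This general technique is commonly called foveated rendering. As a rough illustration of the idea only, and not of Apple's implementation, the sketch below picks a render-resolution scale for a region of the screen based on its distance from the tracked gaze point. The function name, the normalized coordinate system, and every threshold are hypothetical values chosen for the example.

```python
import math


def resolution_scale(tile_center, gaze_point,
                     inner_radius=0.15, outer_radius=0.45, min_scale=0.25):
    """Return a resolution scale between min_scale and 1.0 for one screen tile.

    Positions are normalized screen coordinates in the range 0 to 1.
    All radii and the minimum scale are illustrative assumptions, not
    figures from any real headset.
    """
    distance = math.dist(tile_center, gaze_point)

    # Inside the inner radius the wearer is looking directly at the tile,
    # so it is rendered at full resolution.
    if distance <= inner_radius:
        return 1.0

    # Beyond the outer radius the tile is in peripheral vision and gets
    # the lowest resolution.
    if distance >= outer_radius:
        return min_scale

    # In between, fall off linearly from full to minimum resolution.
    t = (distance - inner_radius) / (outer_radius - inner_radius)
    return 1.0 - t * (1.0 - min_scale)


if __name__ == "__main__":
    gaze = (0.5, 0.5)  # wearer is looking at the center of the display
    for tile in [(0.5, 0.5), (0.8, 0.5), (1.0, 1.0)]:
        print(tile, round(resolution_scale(tile, gaze), 3))
```

A renderer built along these lines would spend most of its pixel budget on the small region around the gaze point, which is where the processing savings described above would come from.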

Apple Silicon Chip

Rumors suggest that Apple is going to use two Mac-level M2 processors for the AR/VR headset, which will give it more built-in compute power than competing products. Apple will use a high-end main processor and a lower-end processor that will manage the various sensors in the device.

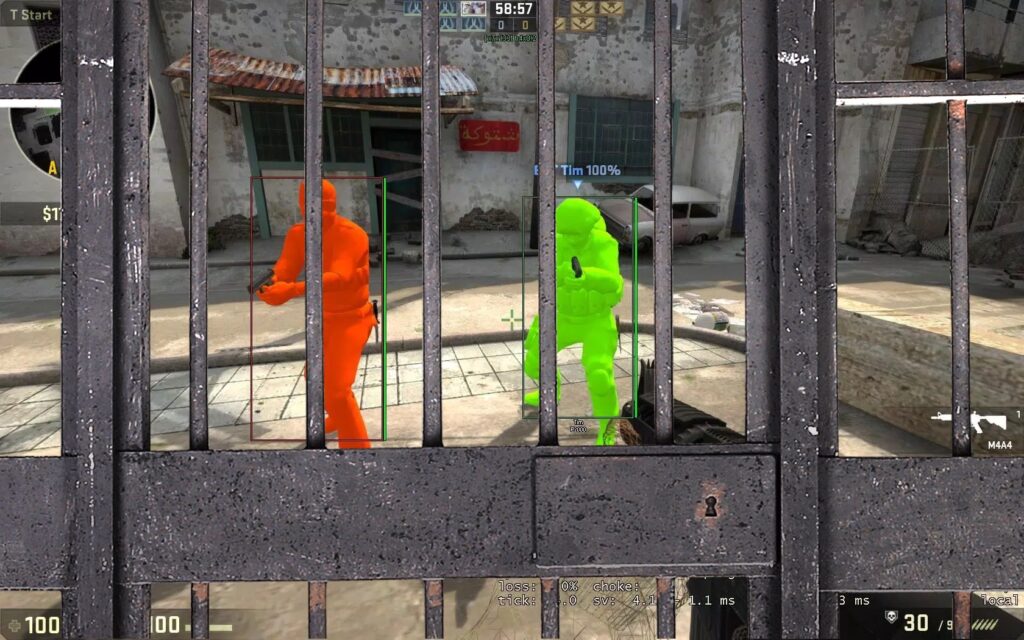

More Than a Dozen Cameras

Apple is outfitting its AR/VR headset with more than a dozen cameras, which will capture motion to translate real-world movement into virtual movement. It is said to have two downward-facing cameras to capture leg movement specifically, a unique feature that will allow for more accurate motion tracking.

The cameras will be able to map the environment, detecting surfaces, edges, and dimensions in rooms with accuracy, as well as people and other objects. The cameras may also be able to do things like enhance small type, and they’ll be able to track body movements.

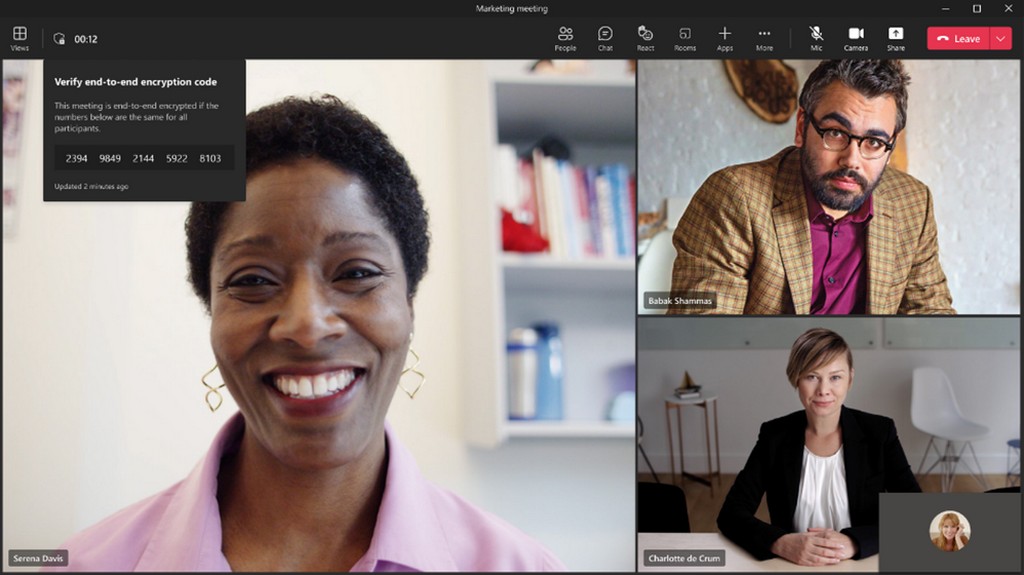

Iris Scanning

For privacy and security, the AR/VR headset will integrate an iris scanner that can read the pattern of the user’s eye, allowing for an iris scan to be used in lieu of a password and for payment authentication.

Iris scanning on the AR/VR headset will be akin to Face ID and Touch ID on the iPhone, iPad, and Mac. It could allow two people to use the same headset, and it is a feature that is not available on competing headsets like Meta’s new Quest Pro.

Thin and Light Design

Apple is aiming for comfort, and the AR/VR headset is rumored to be made from mesh fabric and aluminum, making it much lighter and thinner than other mixed reality headsets on the market. Apple wants the weight to be around 200 grams, which is much lighter than the 722-gram Quest Pro from Meta.

Control Methods

3D sensing modules will detect hand gestures for control purposes, and there will be skin detection. Apple will allow for voice control, and the AR/VR headset will support Siri like other Apple devices. Apple has tested a thimble-like device worn on the finger, but it is not yet clear what kind of input methods we’ll get with the new device.

Interchangeable Headbands

The mesh fabric behind the eyepieces will make the headset comfortable to wear, and it will have swappable Apple Watch-like headbands to choose from.

One headband is rumored to provide spatial audio-like technology for a surround sound-like experience, while another provides extra battery life. It’s not clear if these will make it to launch, but headbands with different capabilities are definitely a possibility.

Facial Expression Tracking

The cameras in the AR/VR headset will be able to interpret facial expressions, translating them to virtual avatars. So if you smile or scowl in real life, your virtual avatar will make the same expression in various apps, similar to how the TrueDepth camera system works with Memoji and Animoji on the iPhone and iPad.

This facial expression detection would allow the headset to read facial expressions and features and mirror them in real time for a lifelike chatting experience. Apple is working with media partners for content that can be watched in VR, and existing services like Apple TV+ and Apple Arcade are expected to integrate with the headset.

Independent Operation

With two Apple silicon chips inside, the headset will not need to rely on a connection to an iPhone or a Mac for processing power, and it will be able to function on its own.