INTRODUCTION:

Netflix is the world’s leading Internet television network, with over 160 million members in over 190 countries. Our members enjoy hundreds of millions of hours of content per day, including original series, documentaries and feature films. With all of our all-time favourites right at our fingertips, machine learning has taken its place on the podium, and this post dives into how it is used.

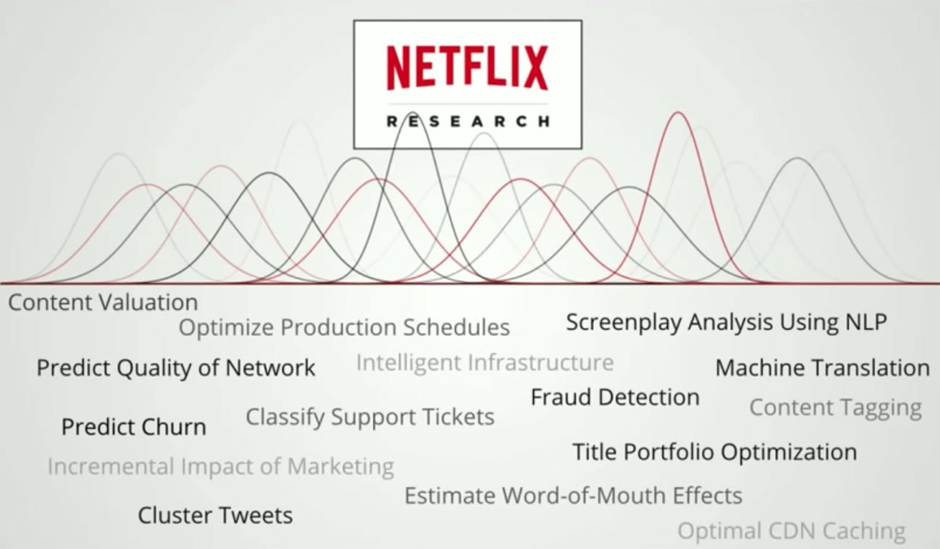

Machine learning impacts many exciting areas throughout our company. Historically, personalization has been the most well-known area, where machine learning powers our recommendation algorithms. We’re also using machine learning to help shape our catalogue of movies and TV shows by learning the characteristics that make content successful. Machine learning also lets us optimize video and audio encoding, adaptive bitrate selection, and our in-house Content Delivery Network.

I believe that machine learning can open up many new perspectives in our lives, but only if we keep pushing the state of the art forward. This means coming up with new ideas and testing them out, whether they are new models and algorithms or improvements to existing ones.

Operating a large-scale recommendation system is a complex undertaking: it requires high availability and throughput, involves many services and teams, and the environment of the recommender system changes every second. In this post we introduce RecSysOps, a set of best practices and lessons that we learned while operating large-scale recommendation systems at Netflix. These practices helped us keep our system healthy by:

1) reducing our firefighting time, 2) focusing on innovations and 3) building trust with our stakeholders.

RecSysOps has four key components: issue detection, issue prediction, issue diagnosis and issue resolution.

Of the four components of RecSysOps, issue detection is the most critical because it triggers the rest of the steps. Lacking a good issue detection setup is like driving a car with your eyes closed.

The very first step is to incorporate all the known best practices from related disciplines. Because building recommendation systems involves both software engineering and machine learning, this includes all DevOps and MLOps practices, such as unit testing, integration testing, continuous integration, checks on data volume and checks on model metrics.
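As an illustration of the last two practices, here is a minimal Python sketch of a data volume check and a model metric check. The function names, the 20% tolerance and the 0.02 allowed metric drop are assumptions made for this example; they are not values or tooling described by Netflix.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


def check_data_volume(row_count: int, expected: int, tolerance: float = 0.2) -> CheckResult:
    """Fail if the row count deviates from the expected count by more than `tolerance`."""
    if expected <= 0:
        raise ValueError("expected must be positive")
    deviation = abs(row_count - expected) / expected
    return CheckResult(
        name="data_volume",
        passed=deviation <= tolerance,
        detail=f"rows={row_count}, expected={expected}, deviation={deviation:.1%}",
    )


def check_model_metric(value: float, baseline: float, max_drop: float = 0.02) -> CheckResult:
    """Fail if an offline metric falls more than `max_drop` below the baseline."""
    drop = baseline - value
    return CheckResult(
        name="model_metric",
        passed=drop <= max_drop,
        detail=f"value={value:.3f}, baseline={baseline:.3f}, drop={drop:.3f}",
    )


def run_checks(results: list[CheckResult]) -> list[CheckResult]:
    """Return only the failed checks, so the caller can alert on them."""
    return [r for r in results if not r.passed]


if __name__ == "__main__":
    # Hypothetical numbers: the batch is 30% smaller than usual, the metric is stable.
    failures = run_checks([
        check_data_volume(row_count=70_000, expected=100_000),
        check_model_metric(value=0.815, baseline=0.820),
    ])
    for failure in failures:
        print(f"FAILED {failure.name}: {failure.detail}")
```

With these hypothetical numbers only the data volume check fails. In practice such checks would typically run as part of continuous integration or before a model is published.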

The second step is to monitor the system end-to-end from your own perspective. A large-scale recommendation system often involves many teams, and from the perspective of an ML team there are both upstream teams (who provide data) and downstream teams (who consume the model).
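One simple way to picture end-to-end monitoring is a freshness check that covers every stage, from upstream data to downstream serving, rather than only the model itself. The sketch below is an assumption for illustration: the stage names and the maximum ages are hypothetical and do not describe Netflix’s actual pipeline.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical stages, ordered from upstream to downstream,
# each with the maximum allowed age of its last successful run.
MAX_AGE = {
    "upstream_data": timedelta(hours=6),
    "model_scores": timedelta(hours=12),
    "downstream_serving": timedelta(hours=1),
}


def find_stale_stages(last_success: dict[str, datetime], now: datetime) -> list[str]:
    """Return stages, in pipeline order, whose last successful run is missing or too old."""
    stale = []
    for stage, max_age in MAX_AGE.items():
        finished = last_success.get(stage)
        if finished is None or now - finished > max_age:
            stale.append(stage)
    return stale


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    last_success = {
        "upstream_data": now - timedelta(hours=2),
        "model_scores": now - timedelta(hours=20),  # older than the 12-hour limit
        "downstream_serving": now - timedelta(minutes=30),
    }
    print(find_stale_stages(last_success, now))
```

Because the stages are checked in pipeline order, the first stale stage in the result is a natural place to start looking when something downstream goes wrong.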

The third step towards comprehensive coverage is to understand your stakeholders’ concerns. This is the best way to increase the coverage of the issue detection component. In the context of our recommender systems, these concerns come from two major perspectives: our members and our items.

Detecting production issues quickly is great, but it is even better if we can predict those issues and fix them before they reach production. For example, proper cold-starting of an item (e.g. a new movie, show, or game) is important at Netflix because each item launches only once. It is much like Zara: once the demand for one product is gone, a new product launches.

Once an issue is identified by either the detection or the prediction models, the next phase is to find the root cause. The first step in this process is to reproduce the issue in isolation. The next step is to figure out whether the issue is related to the inputs of the ML model or to the model itself.

Once the root cause of an issue is identified, the next step is to fix it. This part is similar to typical software engineering: we can have a short-term hotfix or a long-term solution. Beyond fixing the issue, another phase of issue resolution is improving RecSysOps itself. Finally, it is important to make RecSysOps as frictionless as possible. This makes the operations smooth and the system more reliable.
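To make the diagnosis step more concrete, here is a minimal Python sketch of one possible way to separate input problems from model problems: compare the feature values logged for a reproduced request with the same features recomputed offline. If they disagree, the inputs are the first suspect; if they agree, attention shifts to the model. The function names, the feature names and the tolerances are assumptions for this example, not a description of Netflix’s tooling.

```python
import math


def diff_features(
    logged: dict[str, float],
    recomputed: dict[str, float],
    rel_tol: float = 1e-6,
) -> list[str]:
    """Return the names of features that are missing on one side or differ in value."""
    mismatched = []
    for name in sorted(set(logged) | set(recomputed)):
        if name not in logged or name not in recomputed:
            mismatched.append(name)
        elif not math.isclose(logged[name], recomputed[name], rel_tol=rel_tol, abs_tol=1e-9):
            mismatched.append(name)
    return mismatched


def triage(logged: dict[str, float], recomputed: dict[str, float]) -> str:
    """Point to the inputs if any feature disagrees, otherwise to the model."""
    mismatched = diff_features(logged, recomputed)
    if mismatched:
        return "inputs: " + ", ".join(mismatched)
    return "model"


if __name__ == "__main__":
    # Hypothetical features for one reproduced request.
    logged = {"days_since_launch": 3.0, "watch_hours": 0.0}
    recomputed = {"days_since_launch": 3.0, "watch_hours": 12.5}
    print(triage(logged, recomputed))
```

A result of "model" only means the inputs look consistent; it narrows the search but does not by itself prove that the model is at fault.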

To conclude, in this blog post I introduced RecSysOps, a set of best practices and lessons that we’ve learned at Netflix. I think these patterns are useful to consider for anyone operating a real-world recommendation system who wants to keep it performing well and improve it over time. Overall, putting these aspects together has helped us significantly reduce issues, increase trust with our stakeholders, and focus on innovation.

BY: SHANNUL H. MAWLONG

https://research.netflix.com/research-area/machine-learning

References:

[1] Eric Breck, Shanqing Cai, Eric Nielsen, Michael Salib, and D. Sculley. 2017. The ML Test Score: A Rubric for ML Production Readiness and Technical Debt Reduction. In Proceedings of IEEE Big Data.

[2] Scott M. Lundberg and Su-In Lee. 2017. A Unified Approach to Interpreting Model Predictions. In Advances in Neural Information Processing Systems 30, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett (Eds.). Curran Associates, Inc., 4765–4774.