Although a lot has changed in recent years when it comes to empowering women to pursue professional careers, there is still plenty of room for much-needed improvement. Researchers from the University of Melbourne, in their study “Ethical Implications of AI Bias as a Result of Workforce Gender Imbalance”, commissioned by UniBank, investigated the problem of AI favouring male job applicants over female ones.

Researchers observed hiring patterns for three specific roles chosen based on gender ratios:

- Male-dominated – data analyst

- Gender-balanced – finance officer

- Female-dominated – recruitment officer.

Half of the hiring panellists were given the original CVs with the candidates’ genders shown, and the other half were given the exact same CVs but with the genders swapped (male to female and female to male; for example, “Julia” was changed to “Peter” and “Mark” to “Mia”). The recruiters were asked to rank the CVs, with 1 being the best-ranked candidate. Finally, the researchers created a hiring algorithm based on the panellists’ decisions.
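
The swap design described above can be sketched in a few lines of Python. This is only an illustration, not the researchers’ code: the CV fields, the `female_rank_penalty` helper and the rankings are made up, and only the name pairs come from the study description.

```python
from statistics import mean

# Name pairs used to flip the apparent gender of a CV (taken from the article).
SWAP = {"Julia": "Peter", "Peter": "Julia", "Mark": "Mia", "Mia": "Mark"}
GENDER = {"Julia": "F", "Mia": "F", "Peter": "M", "Mark": "M"}


def swap_gender(cv: dict) -> dict:
    """Return a copy of the CV where only the name (apparent gender) changes."""
    return {**cv, "name": SWAP[cv["name"]]}


def female_rank_penalty(cvs_a, ranks_a, cvs_b, ranks_b):
    """Mean of (rank when shown as female - rank when shown as male) per CV.

    Rank 1 is best, so a positive value means the same CV was ranked
    worse when it carried a female name.
    """
    penalties = []
    for cv_a, cv_b in zip(cvs_a, cvs_b):
        rank_as = {
            GENDER[cv_a["name"]]: ranks_a[cv_a["id"]],
            GENDER[cv_b["name"]]: ranks_b[cv_b["id"]],
        }
        penalties.append(rank_as["F"] - rank_as["M"])
    return mean(penalties)


# Hypothetical CVs with identical skills; panel A sees the originals,
# panel B sees the gender-swapped versions.
original_cvs = [
    {"id": "cv1", "name": "Julia", "years_experience": 6},
    {"id": "cv2", "name": "Mark", "years_experience": 6},
]
swapped_cvs = [swap_gender(cv) for cv in original_cvs]

# Made-up rankings for illustration only (not data from the study).
panel_a_ranks = {"cv1": 2, "cv2": 1}  # Julia ranked 2nd, Mark 1st
panel_b_ranks = {"cv1": 1, "cv2": 2}  # Peter ranked 1st, Mia 2nd

penalty = female_rank_penalty(
    original_cvs, panel_a_ranks, swapped_cvs, panel_b_ranks
)
print(f"Average rank penalty for a female name: {penalty}")
```

Because each CV is compared with itself, any difference in rank can only come from the name on it, which is what makes this kind of experiment useful for detecting bias.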

The results showed that women’s CVs were ranked up to 4 places lower than men’s, even though the candidates had the same skills. The recruiters claimed that they were judging based on experience and education.

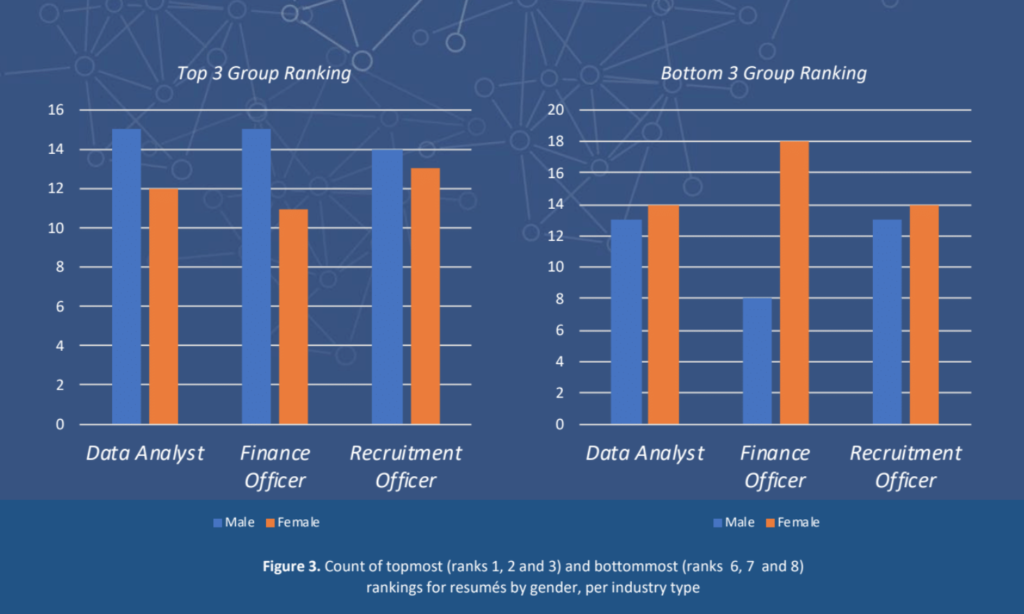

Male candidates were more often ranked in the top 3 for all jobs listed, and female candidates were more often ranked in the bottom 3 for all jobs!

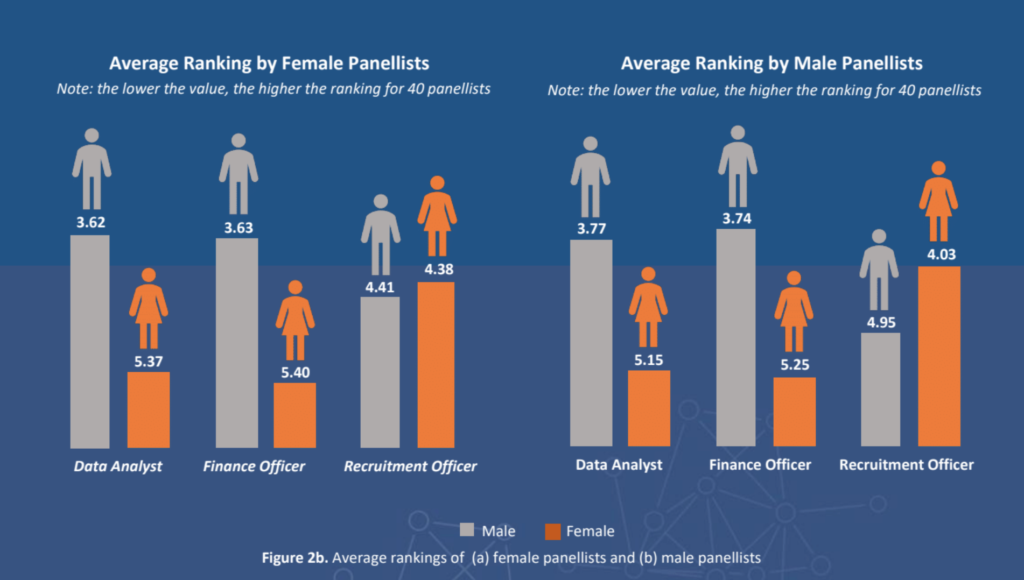

Male candidates were, on average, ranked higher for the data analyst and finance officer positions by both female and male recruiters! That suggests the bias was unconscious. However, for the female-dominated role of recruitment officer, the bias also worked the other way round: female CVs were, on average, ranked slightly better than male ones.

The researchers used a regression analysis, which showed that a candidate’s gender was one of the most critical factors in deciding who would get the job.
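
To give a feel for what such a regression measures, here is a minimal sketch using ordinary least squares. The article does not describe the study’s actual model, so the two predictors (`years_experience`, `is_female`), the helper names and the toy data are all assumptions made for illustration.

```python
def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(rows, y):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y."""
    x = [[1.0, *row] for row in rows]  # prepend an intercept column
    k = len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(x, y)) for i in range(k)]
    return solve(xtx, xty)


# Made-up data, not from the study: (years_experience, is_female) per CV.
features = [(2, 0), (4, 0), (6, 0), (2, 1), (4, 1), (6, 1)]
# Average rank given by the panel (lower is better). Constructed so that
# a female name costs exactly 2 places at equal experience.
avg_rank = [5, 4, 3, 7, 6, 5]

intercept, per_year, female_effect = ols(features, avg_rank)
print(f"Effect of one extra year of experience: {per_year:+.2f} places")
print(f"Effect of a female name:                {female_effect:+.2f} places")
```

The coefficient on `is_female` answers the question “how many places does a CV lose when everything else is held equal and only the gender changes?”. A large coefficient relative to the others is what it means for gender to be a critical factor.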

The researchers warn that AI can adopt human bias on a larger scale. Mike Lanzing, UniBank’s General Manager, points out that “As the use of artificial intelligence becomes more common, it’s important that we understand how our existing biases are feeding into supposedly impartial models”.

Dr Marc Cheong, report co-author and digital ethics researcher from the Centre for AI and Digital Ethics (CAIDE), said that “Even when the names of the candidates were removed, AI assessed resumés based on historic hiring patterns where preferences leaned towards male candidates. For example, giving advantage to candidates with years of continuous service would automatically disadvantage women who’ve taken time off work for caring responsibilities”.
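
The “continuous service” example can be made concrete with a small sketch. The function and the two candidates below are hypothetical and only illustrate how a feature that never mentions gender can still act as a proxy for it.

```python
def longest_continuous_service(spans: list[tuple[int, int]]) -> int:
    """Longest unbroken stretch of employment in years.

    `spans` is a non-empty list of (start_year, end_year) employment periods.
    """
    ordered = sorted(spans)
    best = 0
    run_start, run_end = ordered[0]
    for start, end in ordered[1:]:
        if start <= run_end:  # no gap: extend the current run
            run_end = max(run_end, end)
        else:  # gap: close the current run and start a new one
            best = max(best, run_end - run_start)
            run_start, run_end = start, end
    return max(best, run_end - run_start)


# Two hypothetical candidates with the same 10 years of total experience.
# Names and genders are deliberately absent from the data.
candidate_a = [(2012, 2022)]                # no career break
candidate_b = [(2010, 2015), (2017, 2022)]  # two years off for caring duties

score_a = longest_continuous_service(candidate_a)
score_b = longest_continuous_service(candidate_b)
print(f"Candidate A: {score_a} years, Candidate B: {score_b} years")
```

A model that rewards this score would rank candidate A well above candidate B despite their equal experience, and since career breaks for caring responsibilities are more common among women, removing names from CVs would not remove the bias.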

This study calls for immediate action to prevent AI from acquiring gender biases from people, which can be hard to eradicate later on, especially taking into account the constantly increasing use of AI in recruitment processes. The report suggests a number of measures that can be taken to reduce the bias, for example training programs for HR professionals. It is crucial to find the biases that are in our society before AI mimics them.

Sources