
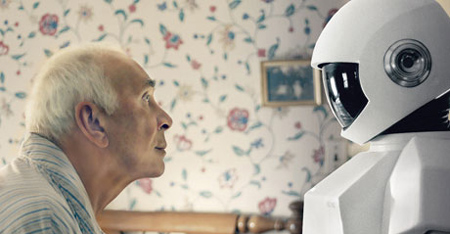

In the age of advanced artificial intelligence and GPT models, the line between machine-generated and human-written text is blurring. Given GPT's remarkable ability to produce coherent, contextually relevant content, a question arises: can we reliably distinguish text generated by GPT from text written by humans?

GPT models have reached a point where they can produce remarkably human-like text across many domains, including literature, news articles, poetry, and even code. This progress has sparked both fascination and concern about the authenticity and credibility of the text proliferating online. One of the main obstacles to telling human-written and machine-generated text apart is the sophistication of GPT models themselves. Trained on vast amounts of data, these models capture language patterns, semantics, and context so well that they can mimic human writing styles and produce coherent narratives that closely resemble human-authored content.

Algorithms that try to determine whether a text was written by a human or generated by AI rely on various verification methods. The two main approaches are statistical analysis, which examines features such as word frequencies, sentence structures, and syntactic patterns, and pattern recognition, which trains machine learning models to recognize patterns specific to GPT-generated text.
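To make the statistical-analysis approach more concrete, here is a minimal Python sketch that computes a few simple features of the kind such a detector might look at: word and sentence counts, average sentence length, the spread of sentence lengths, vocabulary richness (type-token ratio), and the most frequent words. The function name `stylometric_features` and the choice of features are illustrative assumptions only; commercial detectors do not publish their exact features or models. In the pattern-recognition approach, features like these (or the raw text) would instead be fed to a classifier trained on labeled human-written and AI-generated examples.

```python
import re
import statistics
from collections import Counter

# Hypothetical helper for illustration; not taken from any real detector.
WORD_RE = re.compile(r"[a-z']+")


def stylometric_features(text: str) -> dict:
    """Return a few simple statistical features of `text`."""
    # Naive sentence split: a sentence ends at one or more of . ! ?
    # followed by whitespace or the end of the text.
    sentences = [s for s in re.split(r"[.!?]+(?:\s+|$)", text) if s.strip()]
    # Lowercased word tokens made of ASCII letters and apostrophes.
    words = WORD_RE.findall(text.lower())
    counts = Counter(words)
    lengths = [len(WORD_RE.findall(s.lower())) for s in sentences]
    return {
        "num_words": len(words),
        "num_sentences": len(sentences),
        # Mean number of words per sentence.
        "avg_sentence_length": statistics.mean(lengths) if lengths else 0.0,
        # Population standard deviation of sentence length; low variation
        # ("burstiness") is one commonly discussed signal of machine text.
        "sentence_length_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        # Type-token ratio: distinct words divided by total words.
        "type_token_ratio": len(counts) / len(words) if words else 0.0,
        # The three most frequent words with their counts.
        "top_words": counts.most_common(3),
    }


if __name__ == "__main__":
    sample = "I go to buy watermelons. I go home."
    print(stylometric_features(sample))
```

On its own, none of these numbers says who wrote a text; a detector would have to compare them against statistics learned from large collections of known human and AI writing.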

But do these detection tools actually work? Let’s check.

Many people assume they can verify a text simply by asking ChatGPT whether it generated it. However, while models like GPT can analyze and generate text, they cannot reliably verify whether a given text was generated by GPT or written by a human. When asked whether a text was written by AI, the model will usually give an answer, but that answer is very often affirmative, even when the text was written by a human.

For instance, consider an excerpt from an article written entirely by a human, without any help from GPT. When asked whether it was produced by AI, the GPT model responds: “Yes, the text you mentioned appears to have been generated by an AI model.” However, if the text is short and lacks meaningful content, such as “I go to buy watermelons because I don’t have anything else to do,” the answer becomes noncommittal: “The text ‘I go to buy watermelons because I don’t have anything else to do’ could potentially have been generated by AI, but it could also have been written by a human. It expresses a simple reason for going to buy watermelons and doesn’t exhibit complex language or thought patterns that would exclusively indicate AI generation. Therefore, it’s difficult to ascertain definitively whether it was produced by AI or authored by a human.”

There are also dedicated platforms for checking plagiarism and detecting whether a text came from an AI model. While writing this article, I used the Scribbr Free AI Content Detector, one of the most widely used detectors. When I pasted in an AI-generated text, the detector reported a 35% likelihood that it was produced by AI. However, after I removed all commas and other punctuation marks from the same text, the score dropped straight to 0%, which effectively means the tool judged the text to be 100% human-written.
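The punctuation experiment is easy to reproduce, and a short sketch hints at why it can matter. The Python code below strips ASCII punctuation with `str.translate` and compares a naive average-sentence-length measure before and after. The helper names are hypothetical, and this is not how Scribbr's detector works internally (its model is not public); it only illustrates that any punctuation-dependent feature changes drastically once punctuation disappears, which is one plausible explanation for the drop, not a confirmed one.

```python
import re
import string


def strip_punctuation(text: str) -> str:
    # Delete every ASCII punctuation character in string.punctuation
    # (commas, periods, quotes, etc.). Typographic quotes such as ’ are not included.
    return text.translate(str.maketrans("", "", string.punctuation))


def avg_sentence_length(text: str) -> float:
    # Naive sentence split on ., ! and ?; a text with no terminators
    # therefore counts as a single sentence.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = text.split()
    return len(words) / len(sentences) if sentences else 0.0


original = "The model writes fluent text. It uses commas, clauses, and periods."
stripped = strip_punctuation(original)
print(stripped)
print(avg_sentence_length(original), avg_sentence_length(stripped))  # 5.5 11.0
```

With the punctuation gone, the same eleven words collapse into one long "sentence", so a feature like average sentence length doubles even though the wording is unchanged.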

I decided to test the tool further by pasting a snippet from a CNN article published on the day I wrote this post. The excerpt reads: “It’s the latest in a budding line of sci-fi themed press tour looks turned out by the actor and her longtime stylist Law Roach. During the Fendi show at Haute Couture Week in Paris last month, Zendaya was spotted in a meticulously carved V-shape, fringe that smacked of the camp, quirky 20th-century retro futurism that once defined our vision of tomorrow.” According to the detector, there is a 78% likelihood that this text was generated by GPT technology. Yet it seems highly improbable that a reputable news outlet like CNN relies on AI to write its articles.

In conclusion, GPT models, which learn from the vast amount of text available on the Internet, have progressed so much in recent years that their output closely resembles human writing. While numerous platforms offer verification services, their effectiveness often falls short, and at present the most reliable form of verification remains human intuition.

On the other hand, the fact that no one can reliably tell whether a given text was written or generated means people can save time and cut costs when producing engaging advertisements, product documentation, or social media content, faster than ever before and in a way that no one will recognize as generated.

Sources:

https://edition.cnn.com/2024/02/09/style/zendaya-dune-red-carpet-dressing/index.html

https://www.scribbr.com/ai-detector/

https://chat.openai.com
