AUTONOMOUS VEHICLES

(autonomous vehicles – part 1)

#AUTONOMOUS CARS, #SELF-DRIVING CARS, #INNOVATIONS, #FORD, #GOOGLE

Picture:URL:http://www.wired.com/wp-content/uploads/2015/11/IMG_6155-932×524.jpg or URL:http://www.wired.com/2015/11/ford-self-driving-car-plan-google/#slide-5

Picture:URL:http://www.wired.com/wp-content/uploads/2015/11/IMG_6689-932×524.jpg or URL:http://www.wired.com/2015/11/ford-self-driving-car-plan-google/#slide-6

Articles: (from WIRED – http://www.wired.com/category/transportation/)

- “Ford’s Skipping the Trickiest Thing About Self-Driving Cars”

Author: Alex Davies; Date of Publication: November 10, 2015

URL:http://www.wired.com/2015/11/ford-self-driving-car-plan-google/#slide-1

- “Ford’s Testing Self-Driving Cars In a Tiny Fake Town”

Author: Alex Davies; Date of Publication: November 13, 2015

URL:http://www.wired.com/2015/11/fords-testing-self-driving-cars-mcity/

- “A Google-Ford Self-Driving Car Project Makes Perfect Sense”

Author: Alex Davies; Date of Publication: December 22, 2015

URL:http://www.wired.com/2015/12/a-google-ford-self-driving-car-project-makes-perfect-sense/

- “The Clever Way Ford’s Self-Driving Cars Navigate in Snow”

Author: Alex Davies; Date of Publication: January 11, 2016

URL:http://www.wired.com/2016/01/the-clever-way-fords-self-driving-cars-navigate-in-snow/

- “Google’s Self-Driving Cars Aren’t as Good as Humans—Yet”

Author: Alex Davies; Date of Publication: January 12, 2016

URL:http://www.wired.com/2016/01/google-autonomous-vehicles-human-intervention/

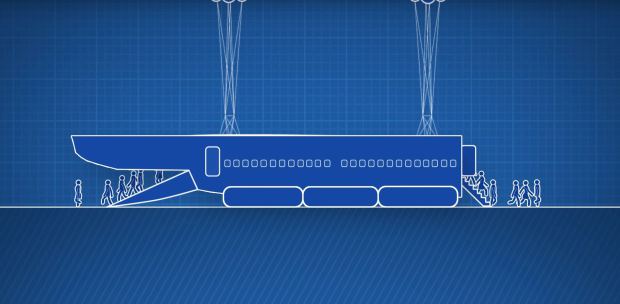

A look at the visions and strategies of companies in the automotive sector shows that more and more manufacturers are developing technologies for driverless, self-driving cars. The article “Ford’s Skipping the Trickiest Thing About Self-Driving Cars” distinguishes two paths towards automotive autonomy. Conventional, “traditional” automakers favor a step-by-step approach, adding features one by one so that humans cede control over time. They argue that this approach lets them refine the technology and accustom consumers to the coming change, while they keep selling conventional cars in the meantime. Google, by contrast, considers that complete nonsense and has decided to concentrate exclusively on fully autonomous vehicles that are not even equipped with a steering wheel. According to Alex Davies, the author of the articles discussed in this post, Google (as well as Ford) sees no reason for the middle ground of semi-autonomy.

In automotive engineering, automation is divided into six levels, from Level 0 to Level 5. A Level 0 car has no autonomous technology. Each additional level adds progressively more sophisticated technology, up to Level 5, in which computers handle everything and the driver, now a passenger, is strictly along for the ride.

The Ford Motor Company has not said much about its plans for the autonomous age, but it is road-testing a fleet of self-driving Ford Fusion Hybrids in Dearborn, Michigan, and expects to expand beyond its hometown. The company’s special Fusions are loaded with cameras, radar, LIDAR, and real-time 3D mapping to see and navigate the world around them. Ford is also the first automaker to test a fully autonomous car at Mcity, the little fake town built just for self-driving vehicles, where the world around the cars includes concrete, asphalt, fake brick, and dirt. Mcity, officially known as the University of Michigan’s Mobility Transformation Center, is a 32-acre artificial metropolis intended for testing automated and connected vehicle technologies. The company aims to offer a fully autonomous car in five years. According to Alex Davies, Ford decided to concentrate on fully independent vehicles because it wants to avoid the problems of semi-autonomous technology. Like the vast majority of automakers, Ford currently operates at Level 2: its cars can be equipped with plenty of active safety systems, such as blind spot monitoring, parking assist, pedestrian detection, and adaptive cruise control, but the driver is always in charge. With Level 3 capability, the car can steer, maintain proper speed, and make decisions such as when to change lanes, but always with the expectation that the driver will take over if necessary. Ford aims to go directly to Level 4, full autonomy, in which the car is capable of doing everything and human engagement is strictly optional.
Ford wants to skip Level 3 because it contains one of the greatest challenges of this technology: how to safely shift control from the computer to the driver, particularly in an emergency. Alex Davies describes this as a balancing act, one that requires giving drivers the advantage of autonomy (not having to pay attention) while ensuring they are ready to take the wheel if the car encounters something it cannot handle.

Data from another automaker, the German manufacturer Audi, illustrates the problem: its tests show that it takes an average of 3 to 7 seconds, and as long as 10, for a driver to snap to attention and take control, even with flashing lights and verbal warnings. A lot can happen in that time (a car traveling 60 mph covers 88 feet per second), and automakers have different ideas for solving this issue. Audi has decided to implement an elegant, logical human-machine interface (HMI). Volvo, a Swedish premium automobile manufacturer, is creating its own HMI and says it will accept full responsibility for its cars while they are in autonomous mode.
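To make the handover figures concrete, the short Python sketch below converts the quoted speed into feet per second and multiplies it by the 3, 7, and 10 second takeover times quoted from Audi. The function names are my own, and the calculation assumes the car holds a constant 60 mph for the whole handover, which is a simplification.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def feet_per_second(speed_mph: float) -> float:
    """Convert a speed in miles per hour to feet per second."""
    return speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR


def handover_distance_feet(speed_mph: float, takeover_seconds: float) -> float:
    """Distance covered while the driver is still regaining control.

    Assumes constant speed during the handover (an illustrative simplification).
    """
    return feet_per_second(speed_mph) * takeover_seconds


if __name__ == "__main__":
    # 3 to 7 seconds on average, as long as 10 (figures quoted from Audi).
    for seconds in (3, 7, 10):
        distance = handover_distance_feet(60, seconds)
        print(f"{seconds:>2} s at 60 mph: {distance:.0f} ft")
```

Under these assumptions, even the shortest quoted takeover time corresponds to several car lengths of travel with nobody fully in control.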

Both Google and Ford, however, are sidestepping this problem. “Right now, there is no good answer, which is why we are kind of avoiding that space,” says Dr. Ken Washington, Ford’s VP of research and advanced engineering. “We are really focused on completing the work to fully take the driver out of the loop.” Ford has not revealed much about its capacities (how many cars are in the test fleet, or how much ground they have covered), but Washington believes that a fully autonomous car within five years is reasonable if work on the software controlling it progresses well. Ford would limit deployment of its autonomous vehicles to regions where it can provide the extremely detailed maps that self-driving cars require. Currently, the automaker is using its self-driving Fusion Hybrids to make its own maps. It remains to be seen whether that is achievable at a large scale, or whether Ford will work with a company like TomTom or Here. Washington admits that the company’s strategy is quite similar to Google’s, but with two crucial differences. First, Ford already builds cars, and it will continue developing and improving driver assistance features even as it works on Level 4 autonomy. Second, Ford has no plans to sell wheeled pods in which people are simply along for the ride. “Drivers” will always have the opportunity to take the wheel. “We see a future where the choice should be yours,” Washington concludes.

Although the age of self-driving cars has become inevitable, several problems must be solved before the companies involved can officially deploy these vehicles. One of the greatest challenges is getting the robots to handle bad weather. All the autonomous cars now in development use a variety of sensors to analyze the world around them. Radar and LIDAR do most of the work, looking for other cars, pedestrians, and other obstacles, while cameras typically read street signs and lane markers. Alex Davies, the author of the article “The Clever Way Ford’s Self-Driving Cars Navigate in Snow”, stresses that in bad weather these devices may be unable to scan the environment: “if snow is covering a sign or lane marker, there is no way for the car to see it”. Humans typically make their best guess, based on visible markers like curbs and other cars. Ford says it is teaching its autonomous cars to do something similar. As noted above, Ford, like other players in this area, is creating high-fidelity 3D maps of the roads its autonomous cars will travel. Those maps contain specific data such as the exact position of the curbs and lane lines, trees and signs, along with local speed limits and other relevant rules. The more a car knows about a region, the more it can concentrate its sensors and computing power on detecting temporary obstacles (people and other vehicles) in real time. The maps have another advantage: the car can use them to figure out, within a centimeter, where it is at any given moment.
Alex Davies gives the following example to illustrate this point: “The car can’t see the lane lines, but it can see a nearby stop sign, which is on the map. Its LIDAR scanner tells it exactly how far it is from the sign. Then, it’s a quick jump to knowing how far it is from the lane lines.” Jim McBride, Ford’s head of autonomous research, puts it this way: “We’re able to drive perfectly well in snow, we see everything above the ground plane, which we match to our map, and our map contains the information about where all the lanes are and all the rules of the road.” Ford claims it tested this ability in real snow at Mcity. Although the idea of self-locating by deduction may not be exclusive to Ford, the automaker is the first to publicly show that it can use its maps to navigate on snow-covered roads. This technology has not yet solved all the problems of autonomous driving in bad weather, however. Falling rain and snow can interfere with LIDAR and cameras, and driving safely requires more than knowing where you are on a map: you also need to be able to see those temporary obstacles.
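The stop-sign example can be sketched in a few lines of Python. This is only an illustration of the reasoning, not Ford's method: the coordinates, the function names, and the assumption that the car's heading is already known are all hypothetical, and a real system would match many points above the ground plane rather than a single landmark.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float  # metres along the road in the map frame (hypothetical)
    y: float  # metres across the road in the map frame (hypothetical)


def locate_vehicle(landmark_on_map: Point, lidar_range_m: float,
                   lidar_bearing_rad: float, heading_rad: float) -> Point:
    """Estimate the vehicle position from one landmark stored in the map.

    The bearing is measured counterclockwise from the vehicle's forward
    axis; the heading is assumed to be known (an illustrative assumption).
    """
    angle = heading_rad + lidar_bearing_rad
    return Point(
        landmark_on_map.x - lidar_range_m * math.cos(angle),
        landmark_on_map.y - lidar_range_m * math.sin(angle),
    )


def lateral_offset_to_lane_line(vehicle: Point, lane_line_y: float) -> float:
    """Signed distance from the vehicle to a lane line stored in the map."""
    return lane_line_y - vehicle.y


if __name__ == "__main__":
    stop_sign = Point(x=20.0, y=-3.0)            # made-up map entry
    # LIDAR reports the sign 5 m away, ahead and to the right of the car.
    vehicle = locate_vehicle(stop_sign, 5.0, math.atan2(-3.0, 4.0), 0.0)
    # Lane lines are invisible under snow, but their map positions are known.
    for name, lane_y in (("left", 1.75), ("right", -1.75)):
        offset = lateral_offset_to_lane_line(vehicle, lane_y)
        print(f"{name} lane line: {offset:+.2f} m")
```

The point of the sketch is the "quick jump" Davies describes: once the car knows where it is relative to one mapped object, every other mapped object, including the hidden lane lines, follows by subtraction.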

Picture:URL:http://www.wired.com/wp-content/uploads/2016/01/Snowtonomous_4693_Story-art.jpg or URL:http://www.wired.com/2016/01/the-clever-way-fords-self-driving-cars-navigate-in-snow/

According to the article “A Google-Ford Self-Driving Car Project Makes Perfect Sense” (Alex Davies; date of publication: December 22, 2015), as well as a Yahoo! Autos report, Ford and Google plan to create a joint venture to work on self-driving cars. The setup would use Google’s very sophisticated autonomous software in Ford cars, playing to each company’s strength: Google’s fleet of self-driving cars has logged more than 1.2 million miles in the past few years and covers 10,000 more each week, while Ford makes and sells millions of cars each year. “If it is true, it makes perfect sense,” Davies emphasizes. In his opinion, it is reasonable that Google would want to cooperate with an established, experienced automaker, because Google has never needed to think about the tens of thousands of parts that must come together following incredibly strict federal guidelines, or about processes that require huge plants and specific competencies. Ford has been doing all that for a century, so it knows a lot that Google does not. Ford started to talk publicly about its autonomous driving research two years ago, including its interest in finding new partners. Mark Fields, the CEO of the Ford Motor Company, has said that the company is actively looking to work with startups and bigger companies, and that this work is a priority for him. The cooperation would also make sense because, as presented above, Google’s and Ford’s approaches to autonomous driving are remarkably similar. The vast majority of automakers plan to deploy self-driving technologies progressively, adding features one by one so that drivers cede control over time.
Google, however, decided to build a car with no steering wheel, no pedals, and no role for the human other than sitting still and behaving while the car does the driving. Automobile manufacturers like Mercedes, Audi, GM, and Tesla plan to offer features that let the car do the driving some of the time, using the human as backup in emergency situations. Because this “level” of autonomous driving involves safely transferring control between robot and human, particularly in dangerous situations, Google and Ford have both decided to avoid it. At the same time, Alex Davies thinks it is very unlikely that the Ford Motor Company will be content to provide nothing but wheels, motors, and seats while Google does all the relevant work. Bill Ford, the executive chairman and former CEO of the Ford Motor Company, has stressed that he does not want to see Ford reduced to the role of a hardware subcontractor for companies doing the more creative, innovative work. Dr. Ken Washington, Ford’s VP of research and advanced engineering, has likewise said that he wants the automaker to build its own technology. “We think that’s a job for Ford to do.”

When considering cooperation between Ford and Google, it is also worth noting that, as another article puts it, “Google’s Self-Driving Cars Aren’t as Good as Humans (Yet)”. Google has recently announced that its engineers assumed control of an autonomous vehicle 341 times between September 2014 and November 2015. That may sound like a lot, but Google’s autonomous fleet covered 423,000 miles in that time. Google’s cars have never been at fault in a crash, and Google’s data shows a meaningful drop in “driver disengagements” over the past year. Google’s rate of disengagements is also far lower than those declared by other companies testing autonomous technology in California, including Nissan, VW, and Mercedes-Benz. Of the 341 instances where Google engineers took the wheel, 272 stemmed from the “overall stability of the autonomous driving system”, meaning things like communication and system failures. Chris Urmson, the project’s technical lead, does not find this very troubling, because, as he states, “hardware is not where Google is focusing its energy right now”. The Google team is more concerned with how the car makes decisions, and it will make the software and hardware more robust before entering the market. The remaining 69 takeovers concern more important issues: they are “related to safe operation of the vehicle,” meaning those times when the autonomous car might have made a bad decision.
No public reports exist for these incidents, because of how Google’s simulator program works: if the engineer in the car is not fully convinced that the AI will perform the appropriate action, she takes control of the vehicle. Later, back at headquarters in Mountain View, she transfers all of the car’s data into a computer, and the team sees what the car would have done had she not taken the wheel. According to data Google recently shared with the California Department of Motor Vehicles (DMV), 13 of those 69 incidents would have led to crashes. Google’s cars have driven 1.3 million miles since 2009. They can identify hand signals from traffic officers and “think” at speeds no human can match. The Google cars have been involved in 17 crashes but have never been at fault. Google had previously predicted that the vehicles will be road-ready by 2020.

At this point, the team usually cannot solve problems with a rapid adjustment to the code; the challenges have become far more sophisticated and complicated. Chris Urmson presents the following example: “In a recent case the car was on a one-lane road about to make a left, when it decided to turn tight instead – just as another car was using the bike lane to pass it on the right.” “Our car was anticipating that since the other car was in the bike lane, he was going to make a right turn.” “The Google car was ahead and had the right of way, so it was about to make the turn. Had the human not taken over, there would have been contact.” Preventing a repeat of such a fringe case is not easy, but Google says that its simulator program “executes dozens of variations on situations the team has encountered in the real world,” which helps the team test how the car would have reacted under slightly different circumstances.

Google is nevertheless getting better: the disengagement numbers have dropped over the past year. Eight of the 13 crash-likely incidents took place in the last three months of 2014, over the course of 53,000 miles. The other five occurred in the following 11 months and 370,000 miles. Assuming those five incidents would have ended in a crash, that works out to one accident every 74,000 miles. “Good, but not as good as humans,” Urmson concludes. According to new data from the Virginia Tech Transportation Institute, Americans log one crash per 238,000 miles. Before bringing its technology to the market, Google must make its cars safer than human drivers (who cause more than 90 percent of the crashes that kill more than 30,000 people in the US every year). “You need to be very thoughtful in doing this, but you don’t want the perfect to be the enemy of the good.” “We need to make sure we can get that out in the world in a timely fashion,” Urmson stresses. Google’s disengagement numbers must keep dropping. The downward trend will continue, Urmson says, but as the team begins testing in tougher conditions, like bad weather and busier urban areas, sporadic upticks are to be expected. “As we push the car into more complicated situations, we would expect, naturally, to have it fail,” Urmson says. “But overall, the number should go down.”
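The rates discussed above come down to a few divisions, which the following Python sketch reproduces. It uses only the mileage and incident counts quoted in this post; the names and the way the two periods are grouped are my own, and the comparison treats every crash-likely incident as if it had become an actual crash, which is the same assumption the article makes.

```python
# Figures quoted in the text above; nothing here is new data.
TOTAL_MILES = 423_000
DISENGAGEMENTS = 341
CRASH_LIKELY = 13
HUMAN_MILES_PER_CRASH = 238_000  # Virginia Tech Transportation Institute

# (miles driven, crash-likely incidents) for each period named in the text.
PERIODS = {
    "last three months of 2014": (53_000, 8),
    "following 11 months": (370_000, 5),
}


def miles_per_event(miles: float, events: int) -> float:
    """Average distance driven between events of a given kind."""
    if events <= 0:
        raise ValueError("events must be positive")
    return miles / events


if __name__ == "__main__":
    overall = miles_per_event(TOTAL_MILES, DISENGAGEMENTS)
    print(f"all disengagements: one per {overall:,.0f} miles")
    whole_span = miles_per_event(TOTAL_MILES, CRASH_LIKELY)
    print(f"crash-likely, whole period: one per {whole_span:,.0f} miles")
    for label, (miles, incidents) in PERIODS.items():
        rate = miles_per_event(miles, incidents)
        share = rate / HUMAN_MILES_PER_CRASH
        print(f"{label}: one per {rate:,.0f} miles "
              f"({share:.0%} of the human figure)")
```

Splitting the data by period is what makes the improvement visible: the 74,000-mile figure belongs to the later 11 months, while the same calculation over the earlier three months gives a far shorter distance between crash-likely incidents.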

I believe that automotive autonomy, broadly defined, will be one of the most interesting topics of the near future. In my opinion, both paths towards Level 4 and Level 5 automation are full of sophisticated solutions and processes. I have to admit that the second path, the one chosen by Google and Ford, the two companies that have decided to avoid the problem of semi-autonomous technology, is even more fascinating. Even if the cooperation between those two companies is not confirmed, both Google and Ford will be among the most powerful players competing in the race for full automation, and within five years we will have the opportunity to find out what it is like to use our vehicles without having to drive. It is also important to remember two of the main elements that enable self-driving cars to operate: the high-fidelity 3D maps and the radar and LIDAR devices that will be deployed together in autonomous vehicles. I believe that systems combining a LIDAR scanner with 3D maps will give us the most accurate artificial drivers in terms of safety. All things considered, even though the age of autonomous vehicles is on its way, several problems must still be solved before we can act, voluntarily, only as passengers: the behavior of the system at high speeds, the issue of trust, bad road conditions, and emergency situations.

Picture:URL:http://www.wired.com/wp-content/uploads/2015/09/Screen-Shot-2014-12-22-at-2.10.41-PM.jpg or URL:http://www.wired.com/2016/01/google-autonomous-vehicles-human-intervention/

MZ