Although camera-based hand tracking is becoming a viable option for AR and VR headsets, the accuracy of optical tracking remains an unsolved problem. That is where AuraRing fits in. AuraRing, a new electromagnetic tracking system developed by researchers at the University of Washington, combines high-resolution sensing with low power consumption to support AR, VR, and wider wearable uses.

AuraRing consists of two pieces. The first is a ring worn on the index finger, with a wire coil wrapped 800 times around a 3D-printed ring; a tiny battery drives the coil to produce an oscillating magnetic field while drawing just 2.3 milliwatts. The second is a wristband with three sensor coils, which determine the ring's position and orientation in five degrees of freedom (5-DoF) at any given time. The system's resolution is 0.1 mm and its dynamic accuracy is 4.4 mm, far better than what is obtained by tracking the same finger with an external camera.

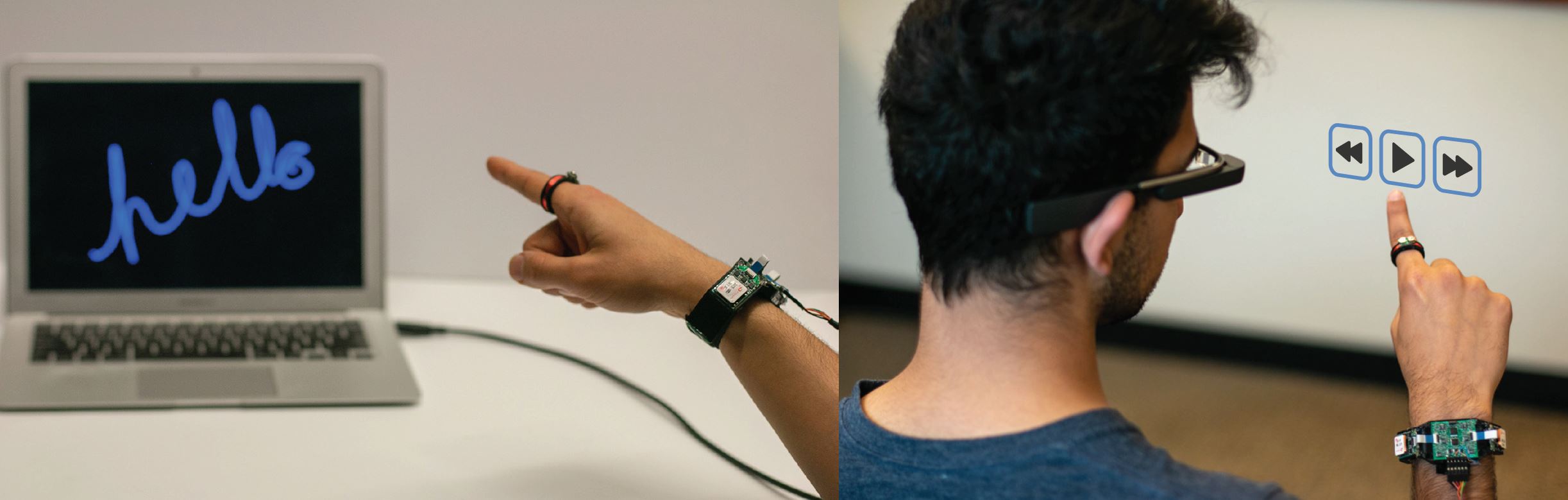

With this level of precision, a finger can write legibly in the air without a touch pad, and can register taps and flick gestures that control a device with a screen from a distance. Because the system relies on magnetic sensing, the researchers suggest that even a finger hidden from view could send text messages, interact with device user interfaces, and play games. AuraRing is also designed to work with a range of finger and hand sizes.

While the researchers' illustration shows a conceptual wristband, the idea is to simply attach the wrist sensors to a smartwatch or another wrist-worn device, so that the user can add precise finger sensing when it is wanted. Co-lead researcher Farshid Salemi Parizi explained that those who want the additional benefits can simply put the ring on, while still keeping all the capabilities of today's smartwatches.

A video of AuraRing in action shows how it can be used for reconstruction, handwriting recognition, and input selection on computer or virtual smartphone displays. The research was funded by the University of Washington's Reality Lab, Twitter, Futurewei, and Google. It is worth noting that Microsoft, headquartered in Redmond, Washington, is working with a wider range of sensors for similar purposes on its own responsive smart ring.

References:

- https://venturebeat.com/2020/02/03/researchers-show-auraring-a-low-power-5dof-gesture-ring-and-wristband

- https://www.sciencedaily.com/releases/2020/02/200203141505.htm

- https://youtu.be/j6p9zqXpTF0

- https://www.eurekalert.org/multimedia/pub/223266.php?from=454497

Uber's self-driving cars will soon be on the streets of Washington, DC. The ride-hailing firm announced that it will gather data there to support the growth of its autonomous vehicle fleet. However, the cars will not drive themselves. Instead, human drivers will operate them at launch to gather mapping data and capture driving scenarios that Uber's engineers can then replicate.

Over the last several years, entering the TV market has been the next big step for smartphone makers. Xiaomi has done so, as have Apple, HTC, and OnePlus. Sony, however, appears to have taken a different turn by introducing its first car model, surprising everybody. The announcement was made at CES 2020 in Las Vegas.
